What is a neural network?

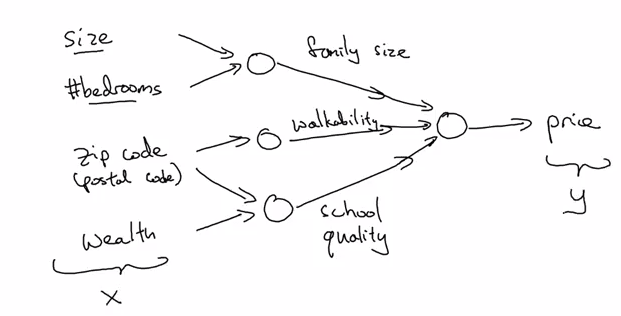

Example: housing price prediction.

Each neuron: a ReLU (rectified linear unit) function, i.e. max(0, ·) applied to a linear function of its inputs.
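Here is a minimal sketch of the single-neuron housing-price model. It assumes one input (house size) and made-up weight and bias values. The neuron computes a linear function of the size and passes it through ReLU, so small houses get a predicted price of zero rather than a negative one.

```python
# Single "neuron" for the housing-price example.
# The weight w and bias b are hypothetical values chosen only for illustration.

def relu(z: float) -> float:
    """Rectified Linear Unit: returns max(0, z)."""
    return max(0.0, z)


def price_neuron(size_sqft: float, w: float = 0.2, b: float = -50.0) -> float:
    """Predict a price (hypothetical units of $1000) from house size."""
    return relu(w * size_sqft + b)


if __name__ == "__main__":
    for size in (100, 250, 500, 1000):
        print(size, price_neuron(size))
```

Sizes small enough that `w * size + b` is negative all map to 0.0. This flat-then-linear shape is the curve drawn in the housing example.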

Stacking multiple layers of neurons: the hidden units represent concepts more general than the raw input features, and the NN finds these concepts automatically from the data instead of having them hand-designed.
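A forward pass through such a stacked network can be sketched in plain Python. This example assumes fully connected layers of ReLU units, one hidden layer, and placeholder weights (not learned values). The input names in the comment are hypothetical. The `forward` loop works for any number of layers, because each layer's output becomes the next layer's input.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def relu(z: float) -> float:
    return max(0.0, z)


def dense_relu(x: Vector, W: Matrix, b: Vector) -> Vector:
    """One fully connected layer: a_j = relu(sum_i W[j][i] * x[i] + b[j])."""
    return [relu(sum(w_ji * x_i for w_ji, x_i in zip(row, x)) + b_j)
            for row, b_j in zip(W, b)]


def forward(x: Vector, layers: List[Tuple[Matrix, Vector]]) -> Vector:
    """Apply the layers in order; each layer's output feeds the next layer."""
    a = x
    for W, b in layers:
        a = dense_relu(a, W, b)
    return a


if __name__ == "__main__":
    # Hypothetical inputs: [size, #bedrooms, zip-code score, wealth score].
    x = [2.0, 3.0, 1.0, 0.5]
    # Hidden layer: 3 units, each connected to all 4 inputs (placeholder weights).
    hidden = ([[0.5, 0.2, 0.0, 0.1],
               [0.1, 0.0, 0.8, 0.3],
               [0.0, 0.1, 0.4, 0.9]],
              [0.0, -0.1, 0.0])
    # Output layer: 1 unit (the price) connected to all 3 hidden units.
    output = ([[1.0, 0.5, 0.7]], [0.2])
    print(forward(x, [hidden, output]))
```

Every hidden unit is connected to every input. What each hidden unit ends up representing is determined by training, not by the programmer.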

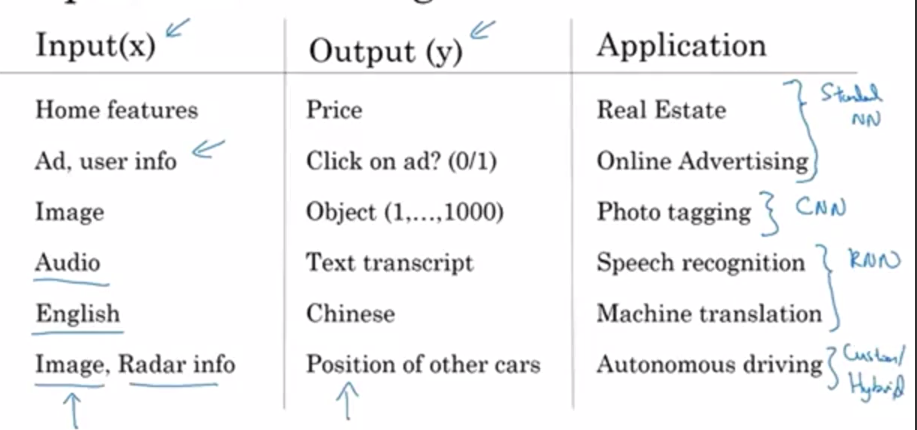

Supervised Learning with Neural Networks

Supervised learning: during training, every input x comes with a corresponding known output (label) y, and the network learns a mapping from x to y.
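The (input, output) pairing can be shown with a toy sketch. It is not code from the course. The labeled pairs are generated from y = 2x + 1 by construction, and a single linear unit is fitted to them with plain gradient descent on mean squared error. The learning rate and epoch count are arbitrary choices for this example.

```python
from typing import List, Tuple


def train(pairs: List[Tuple[float, float]],
          lr: float = 0.05, epochs: int = 2000) -> Tuple[float, float]:
    """Fit y ~ w * x + b to labeled (x, y) pairs by gradient descent on MSE."""
    w, b = 0.0, 0.0
    n = len(pairs)
    for _ in range(epochs):
        # Gradients of (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        dw = sum(2 * (w * x + b - y) * x for x, y in pairs) / n
        db = sum(2 * (w * x + b - y) for x, y in pairs) / n
        w -= lr * dw
        b -= lr * db
    return w, b


if __name__ == "__main__":
    # Toy labeled data: each input x is paired with its label y = 2x + 1.
    data = [(x, 2.0 * x + 1.0) for x in (0.0, 1.0, 2.0, 3.0)]
    w, b = train(data)
    print(f"w={w:.3f}, b={b:.3f}")
```

After training, w and b should come out close to 2 and 1, the rule that produced the labels. Without labels there would be nothing to compute the error against.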

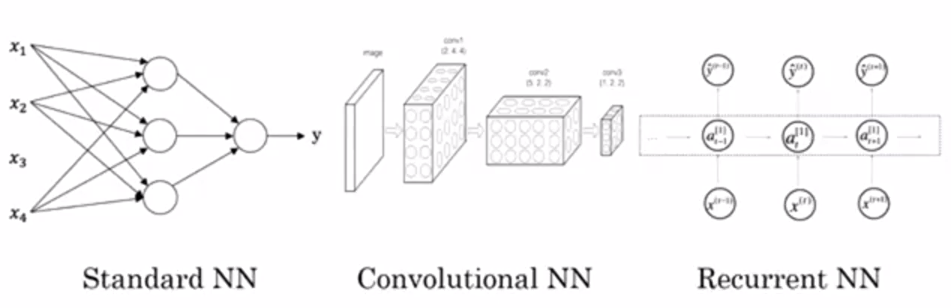

Different NN types are used for different problems:

- structured data: databases, where each feature/column has a well-defined meaning.

- unstructured data: audio, images, text, where individual pixels/tokens have no well-defined meaning on their own.
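The difference can be made concrete with two illustrative values, both made up for this sketch. The first is a structured row with named columns. The second is a tiny unstructured grayscale image. The helper `flatten` is a hypothetical name for unrolling an image into a plain feature vector, which is one common way to feed pixels to a network.

```python
from typing import Dict, List

# Structured data: one database row; each column has a name and a fixed meaning.
# (Values are illustrative only.)
housing_row: Dict[str, float] = {"size_sqft": 2104, "bedrooms": 3, "price_k": 400}

# Unstructured data: a made-up 3x3 grayscale image (intensities in [0, 1]).
# A single value such as 0.8 means nothing by itself; only patterns across
# many pixels (edges, shapes) do.
image: List[List[float]] = [
    [0.0, 0.8, 0.0],
    [0.8, 0.8, 0.8],
    [0.0, 0.8, 0.0],
]


def flatten(img: List[List[float]]) -> List[float]:
    """Unroll an image row by row into a single feature vector."""
    return [pixel for row in img for pixel in row]


if __name__ == "__main__":
    print(housing_row["bedrooms"])  # a feature looked up by its meaning
    print(len(flatten(image)))      # 9 anonymous pixel features
```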

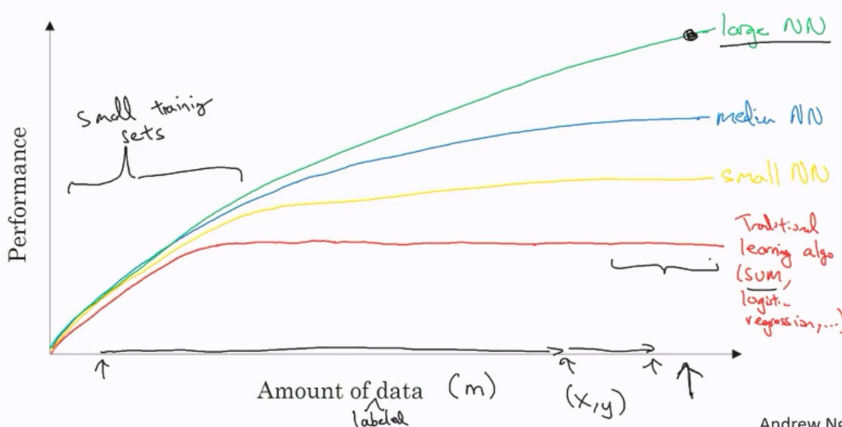

Why is Deep Learning taking off?

Scale drives deep learning progress (scale of both the NN and the data).

Traditional methods: performance plateaus as the amount of data grows further.

NN: performance keeps growing with more data, and larger NNs keep growing further.

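- data has scaled up

- computation has become faster

- new algorithms, e.g. switching from sigmoid to ReLU: ReLU's gradient does not shrink toward 0 for large inputs, so gradient descent converges faster, which in turn speeds up computation too.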
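The sigmoid-to-ReLU point can be checked numerically. For large positive z, the sigmoid's slope shrinks toward zero, so gradient descent takes tiny steps. ReLU's slope stays 1 for every positive input. This is a sketch, and the choice of 0 as ReLU's derivative at z = 0 is a convention assumed here.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    """Derivative of the sigmoid: s(z) * (1 - s(z)), at most 0.25 (at z = 0)."""
    s = sigmoid(z)
    return s * (1.0 - s)


def relu_grad(z: float) -> float:
    """Derivative of max(0, z); at z == 0 we pick 0 by convention."""
    return 1.0 if z > 0 else 0.0


if __name__ == "__main__":
    for z in (0.0, 2.0, 5.0, 10.0):
        print(f"z={z:5.1f}  sigmoid'={sigmoid_grad(z):.6f}  relu'={relu_grad(z):.1f}")
```

As z grows, the sigmoid column drops by orders of magnitude while the ReLU column stays at 1.0. That flat region of the sigmoid is what slows learning.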

About this course

This course: implementing NN.

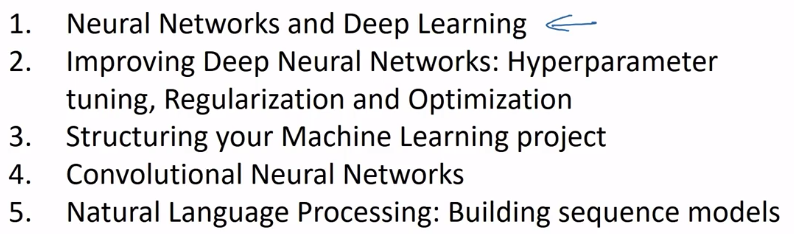

Part 1 of series «Andrew Ng Deep Learning MOOC»:

- [Neural Networks and Deep Learning] week1. Introduction to deep learning

- [Neural Networks and Deep Learning] week2. Neural Networks Basics

- [Neural Networks and Deep Learning] week3. Shallow Neural Network

- [Neural Networks and Deep Learning] week4. Deep Neural Network

- [Improving Deep Neural Networks] week1. Practical aspects of Deep Learning

- [Improving Deep Neural Networks] week2. Optimization algorithms

- [Improving Deep Neural Networks] week3. Hyperparameter tuning, Batch Normalization and Programming Frameworks

- [Structuring Machine Learning Projects] week1. ML Strategy (1)

- [Structuring Machine Learning Projects] week2. ML Strategy (2)

- [Convolutional Neural Networks] week1. Foundations of Convolutional Neural Networks

- [Convolutional Neural Networks] week2. Deep convolutional models: case studies

- [Convolutional Neural Networks] week3. Object detection

- [Convolutional Neural Networks] week4. Special applications: Face recognition & Neural style transfer

- [Sequential Models] week1. Recurrent Neural Networks

- [Sequential Models] week2. Natural Language Processing & Word Embeddings

- [Sequential Models] week3. Sequence models & Attention mechanism