Course intro

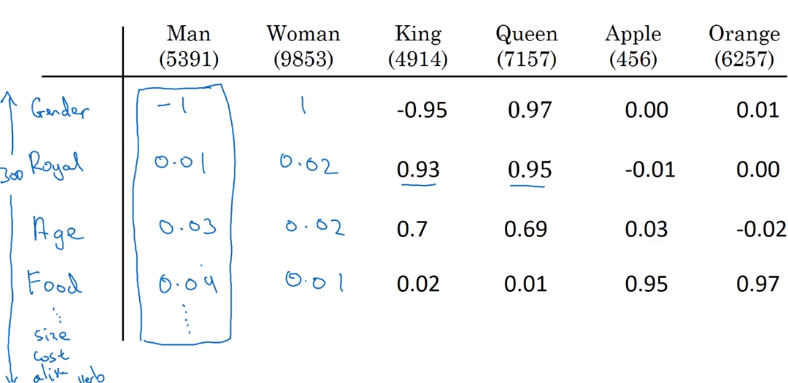

Word Meaning and Representation

denotational semantics

WordNet (via NLTK): word meanings, synonyms, relationships between words, organized hierarchically

Problems: misses nuance, misses new meanings, requires human labor, can't compute word similarity

Traditional NLP (until 2012):

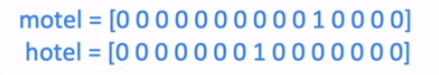

- each word is a discrete symbol: a "localist representation"

- use one-hot vectors for encoding

- problems with one-hot vectors:

- large ...
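
To make the one-hot encoding concrete, here is a minimal sketch in plain Python. The toy token list and the helper names (`build_vocab`, `one_hot`, `dot`) are assumptions made up for illustration; they are not from the course material.

```python
def build_vocab(tokens):
    """Map each distinct token to an integer index (sorted for determinism)."""
    return {word: i for i, word in enumerate(sorted(set(tokens)))}


def one_hot(word, vocab):
    """Return a vector of len(vocab) zeros with a single 1 at the word's index."""
    vec = [0] * len(vocab)
    vec[vocab[word]] = 1
    return vec


def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


# Hypothetical toy corpus; a real vocabulary would be far larger.
tokens = ["motel", "hotel", "conference", "hotel"]
vocab = build_vocab(tokens)

motel = one_hot("motel", vocab)
hotel = one_hot("hotel", vocab)

print(len(vocab), motel, hotel)
print(dot(motel, hotel), dot(hotel, hotel))
```

Each vector has exactly one entry per vocabulary word, so its length grows with the vocabulary. The dot product of two different one-hot vectors is always 0, so the encoding itself carries no information about how related two words are.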